// itp

The camera will prove to be the ancestor of all those apparatuses that are in the process of robotizing all aspects of our lives, from one’s most public acts to one’s innermost thoughts, feelings and desires. — Vilém Flusser, 1983.

The history of the United States is a technological one. Photography’s earliest proliferation coincided with the Civil War, and the medium gained its formal language through government-funded exploratory expeditions that expanded the railroads (and land rights) to the Pacific. Images have been used to classify, quantify and construct a nation: its people as well as its places. That information became the barometer that continually justified the incongruous practices and ideologies that have maintained power structures.

Three days ago, the iPhone turned a decade old as we enter a cultural landscape vastly different from the one we left behind in 2006. Connected devices allow us to take part in mass media in real time, from sharing a momentary thought to igniting societal change. By engaging with these tools, we are all journalists now – sharing our stories as we explore our world(s). And, as Diamond Reynolds taught us on July 6th of last year: while these technologies attempt to obfuscate the powers of societal production, they are also laying bare the realities.

Every photograph is in fact a means of testing, confirming and constructing a total view of reality. Hence the crucial role of photography in ideological struggle. Hence the necessity of our understanding a weapon which we can use and which can be used against us. – John Berger, 1974

The rate at which emergent technologies have changed contemporary society is astonishing and somewhat bewildering: bewildering for those old enough to remember a before, and bewildering for those young enough to assume that the speed and weight of this communication is inhabitable (read: normal). If it is possible to use the first-person plural in a meaningful way at all, we no longer fully grasp what is ours. A massive chasm has emerged, and into it we have cast everything we once held most dear: our relationships, our thoughts, ourselves.

Our homes and communities are no longer the locus of our private lives. A picture of a loved one on a desk was once the extent of the personal information brought into the office, and an intimate conversation was confined to one of the few rooms in our home with a telephone. Our devices encourage us to bring our internal psychic and emotional space everywhere we go and give us the opportunity to broadcast that space everywhere we are not. Our preferences and interests were once observed by looking at our libraries (our books, CDs, VHS tapes, tool kits, etc.), but now we carry them around, informing platforms of our momentary decisions.

Every individual is both consciously writing and naively generating a massive amount of information, making their geographic location, likes, conversations and private actions available anytime and anywhere to telecom providers, search engines, social networks and other eager open ports. Tethered to our devices, our bodies are now the starting and vanishing point of mass surveillance and big data.

I’m not on the outside looking in. I’m not on the inside looking out. I’m in the dead ducking center looking around. — Kendrick Lamar & iOS Auto Correct

Over the second half of last semester, I became totally obsessed with 360 video streaming: trying to get the Ricoh Theta to stream in WebVR. I got close, but ultimately failed because it was hard. But through all this I did circle back around to what I’m actually interested in: phones. The powerful computers we don’t think of as computers but carry around with us all the time. In my mind the Theta is basically a phone – a small, powerful device with a camera on both sides of the sensor plane. It is a machine that suggests the possibility of 360 filmmaking, but many other concepts are implicit in a mobile device, and many other technologies are embedded in it. I think I got distracted by this damn VR zeitgeist. But that’s okay.

So instead, I made this: Channel 9, for Live Web and Actual Fact with Tahir Hemphill. Using the Hip Hop Data Base, I found all the Kendrick Lamar songs that had the word “camera” or “Channel 9” somewhere in the lyrics. Channel 9 is a local television station Lamar mentions often in his work. It is also the name of Microsoft’s developer site, which was named after the channel on which airline pilots would let passengers listen in on cockpit conversations (We the Media, Dan Gillmor, p. 75).
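
The lyric search itself happened in the Hip Hop Data Base, not in my own code, but the filtering step is simple enough to sketch. This is a minimal Python version, assuming the lyrics are already available as (song, line) pairs. The sample data and the `find_matches` helper are hypothetical placeholders, not real lyrics or the project’s actual code.

```python
import re

# Hypothetical placeholder data: (song, lyric line) pairs. These are NOT real
# Kendrick Lamar lyrics; they only stand in for results from the Hip Hop Data Base.
SAMPLE_LYRICS = [
    ("Song A", "Smile for the camera"),
    ("Song B", "It was all over Channel 9"),
    ("Song C", "Nothing to see here"),
]

# Whole-word match for either search term, ignoring case.
PATTERN = re.compile(r"\b(camera|channel\s+9)\b", re.IGNORECASE)


def find_matches(lyrics):
    """Return the (song, line) pairs whose line mentions a search term."""
    return [(song, line) for song, line in lyrics if PATTERN.search(line)]


if __name__ == "__main__":
    for song, line in find_matches(SAMPLE_LYRICS):
        print(f"{song}: {line}")
```

Because the match is whole-word, “cameras” is not counted; dropping the final `\b` would include it.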

The page automatically reloads if the lyric is longer than the window, and a new phrase is randomly selected. If you click the canvas, the text turns green and clicking the black lens icon will save the current state of the canvas to your local machine. The program is designed for mobile – using the accelerometer, if a viewer shakes their device the animation will stop and the words stand still. But because iOS doesn’t allow certain JavaScript functionality, the saveCanvas (or the “click” to take a picture) only works on Android or on a computer.
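
The project runs as JavaScript in the browser. As a rough illustration of the selection rule only, here is a Python sketch that keeps drawing random phrases until one fits the window. It swaps the page reload for a retry loop and assumes a fixed-width font, so `pick_phrase`, `char_width` and the width estimate are all hypothetical.

```python
import random


def text_width(phrase, char_width):
    """Rough width in pixels, assuming a fixed-width font (hypothetical)."""
    return len(phrase) * char_width


def pick_phrase(phrases, window_width, char_width=12, rng=random, max_tries=100):
    """Draw random phrases until one fits the window.

    The real page reloads when a lyric is too long; redrawing in a loop has
    the same effect without a reload. Returns None if nothing fits.
    """
    for _ in range(max_tries):
        phrase = rng.choice(phrases)
        if text_width(phrase, char_width) <= window_width:
            return phrase
    return None


if __name__ == "__main__":
    lyrics = ["short line", "a much, much longer line that will not fit on a phone"]
    print(pick_phrase(lyrics, window_width=200))
```

The `max_tries` cap keeps the loop from running forever if no phrase can fit, which a page reload would not protect against.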

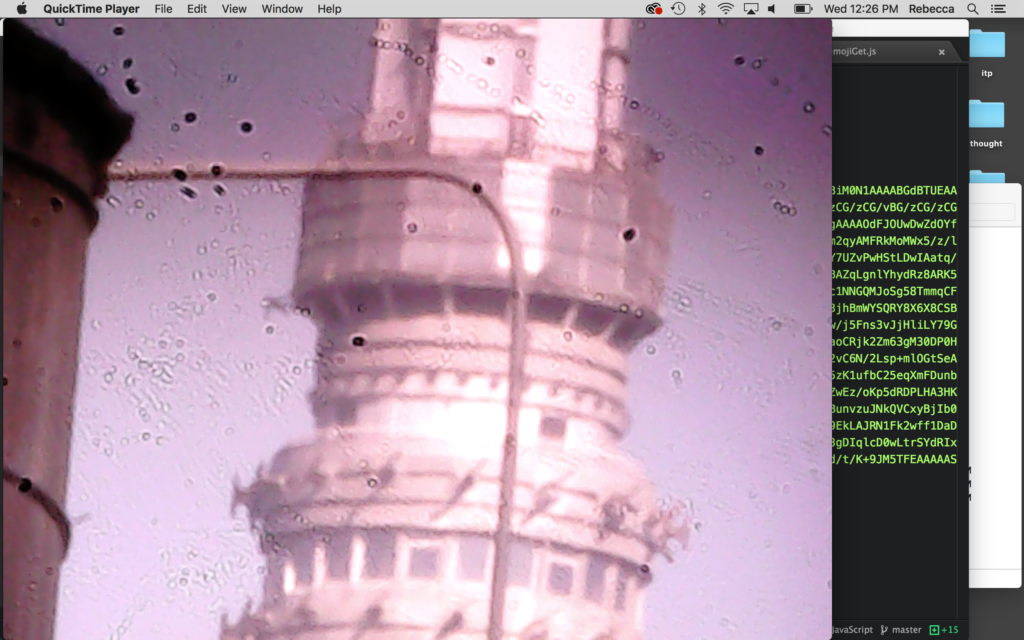

In Digital Imaging Reset, Eric gave us a small webcam to play with. Our challenge was/is to take the sensor and construct our own camera around it. I thought it would be fun to quick & dirty a digital camera that writes to the browser using my Zone VI large format camera & some basic code from Live Web. The marks are dust on the sensor. Even at my most formalist moments, I’ve always been partial to dust, cords & tripod legs in my images as a nod to the means of (photographic) production, or whatever.

I removed the sensor circuit board from the camera and fashioned a cardboard film holder.

I had some initial trouble knowing what I was looking at. Because large format lenses are designed to throw an image that covers a 5 inch by 4 inch area, the tiny sensor captures only a small fraction of what one would normally see on the ground glass, at great magnification. Using the camera and a 210mm lens, Eric was able to make this streaming video image of the Empire State Building antenna from the window of his office.
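
To get a feel for the magnification: the angle of view across a frame is 2 × atan(frame width / (2 × focal length)). Here is a quick Python check, assuming a webcam sensor about 3.6 mm wide (the actual sensor size isn’t given here) and the nominal 5 inch (127 mm) side of a 4x5 frame.

```python
import math


def angle_of_view_deg(frame_mm, focal_mm):
    """Angle of view across one frame dimension, lens focused at infinity."""
    return math.degrees(2 * math.atan(frame_mm / (2 * focal_mm)))


FOCAL_MM = 210.0        # the 210mm lens mentioned above
FILM_WIDTH_MM = 127.0   # nominal 5 inch side of a 4x5 frame
SENSOR_WIDTH_MM = 3.6   # ASSUMPTION: typical small webcam sensor width

film = angle_of_view_deg(FILM_WIDTH_MM, FOCAL_MM)
sensor = angle_of_view_deg(SENSOR_WIDTH_MM, FOCAL_MM)
print(f"4x5 frame: {film:.1f} degrees, webcam sensor: {sensor:.2f} degrees")
print(f"crop across the width: {FILM_WIDTH_MM / SENSOR_WIDTH_MM:.0f}x")
```

With those assumed numbers the 4x5 frame sees roughly 34 degrees across, while the sensor sees about one degree. That is why the image reads like a telescope view.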

Because of the bot conference on Friday, I took the rig up to the 9th floor and made some more tests of the water tower and construction cranes.

Because of the magnification, the camera movements are extremely sensitive: fractions of an inch result in changes of hundreds of feet. My next step is to figure out a smaller format camera that is easier to work with, rather than this overly complicated telescope. But I’m pleased with this proof of concept. Once I get an easier rig set up I can start figuring out what to do with it…
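
Here is a rough way to see why the movements are so touchy. For a distant subject, shifting the lens slides the image across the sensor by about the same amount, and by similar triangles that corresponds to a shift in the scene of distance × movement / focal length. The Python sketch below assumes the 210mm lens and a subject roughly 2 km away, which is a made-up figure for illustration.

```python
FEET_PER_METER = 3.28084


def view_shift_feet(movement_mm, focal_mm, distance_m):
    """Approximate sideways shift of the framed scene, in feet.

    For a distant subject, a lens shift of movement_mm moves the image on the
    sensor by about the same amount; similar triangles scale that up by
    distance / focal length to a shift in the scene itself.
    """
    shift_m = distance_m * movement_mm / focal_mm
    return shift_m * FEET_PER_METER


# ASSUMPTION: subject about 2 km away; 1/4 inch is 6.35 mm.
print(f"{view_shift_feet(6.35, 210.0, 2000.0):.0f} ft")
```

Under those assumptions, a quarter-inch lens shift moves the frame by nearly 200 feet.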

I will hopefully blog more fully about this project tomorrow. It’s still in process. Here is the information on GitHub, along with the initial prototype.

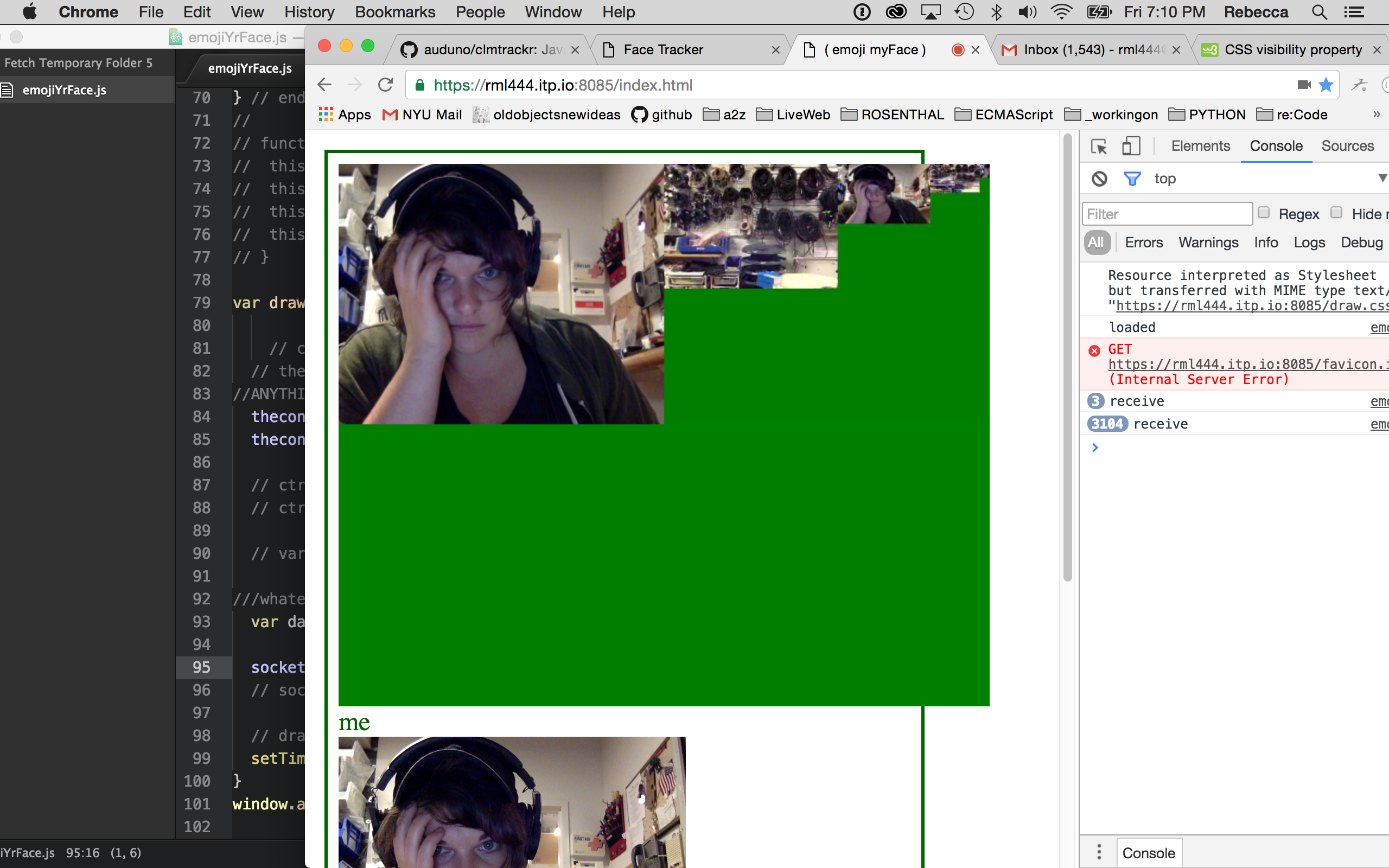

At the Stupid Hackathon last year some friends of mine made Potato Your Face. I just had the idea for Emoji Yr Face, which is what I intend to make.

Still reading this book. The author included a Jack Spicer poem I had forgotten about. Author’s excerpt below, full poem here.

I would like to make poems out of real objects. The lemon to be a lemon that the reader could cut or squeeze or taste–a real lemon like a newspaper in a collage is a real newspaper. I would like the moon in my poems to be a real moon, one which could be suddenly covered with a cloud that has nothing to do with the poem–a moon utterly independent of images. The imagination pictures the real. I would like to point to the real, disclose it, to make a poem that has no sound in it but the pointing of a finger…

Things do not connect; they correspond. That is what makes it possible for a poet to translate real objects, to bring them across language as easily as he can bring across time. That tree you saw in Spain is a tree I could never have seen in California, that lemon has a different smell and a different taste, BUT the answer is this–every place and every time has a real object to correspond with your real object – that lemon may become this lemon, or it may even become this piece of seaweed, or this particular color of gray in this ocean. One does not need to imagine that lemon; one needs to discover it.

Fredman p. 97 // Spicer p. 133

Emojis are very interesting to me. Though we see them as images, the computer understands them as strings of text: each emoji is one or more Unicode characters. Taking up pixel real estate on our screens, they occupy only text space in our parsers. How neat!
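
To see what the parser sees, Python’s standard library can list the code points inside an emoji. Here is a small sketch. The `describe` helper is made up for this post, and the escape sequences are just the emojis mentioned here written out as code points.

```python
import unicodedata


def describe(emoji):
    """Return the pieces of text a computer sees inside one emoji."""
    return {
        "code_points": [f"U+{ord(ch):04X}" for ch in emoji],
        "names": [unicodedata.name(ch, "UNKNOWN") for ch in emoji],
        "utf8_bytes": len(emoji.encode("utf-8")),
        # UTF-16 code units: what a JavaScript string's .length counts
        "utf16_units": len(emoji.encode("utf-16-le")) // 2,
    }


MOUNTAIN = "\U0001F5FB"  # mount fuji
WAVE = "\U0001F30A"      # water wave
HEART = "\u2764\ufe0f"   # heart plus a variation selector requesting emoji style

for e in (MOUNTAIN, WAVE, HEART):
    print(e, describe(e))
```

The mountain is a single code point that takes four bytes in UTF-8 (and two UTF-16 units, which is what a JavaScript string length reports). The heart is two code points: the heart itself plus an invisible variation selector asking for the emoji-style rendering.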

Through use, language changes over time. And people ❤️ communicating with images. Various corporations pay the Unicode Consortium $20,000 a year in order to make new emojis for consumer use. In this way, these companies are defining how people communicate. It’s like they get to pay to put new words into the dictionary, or something.

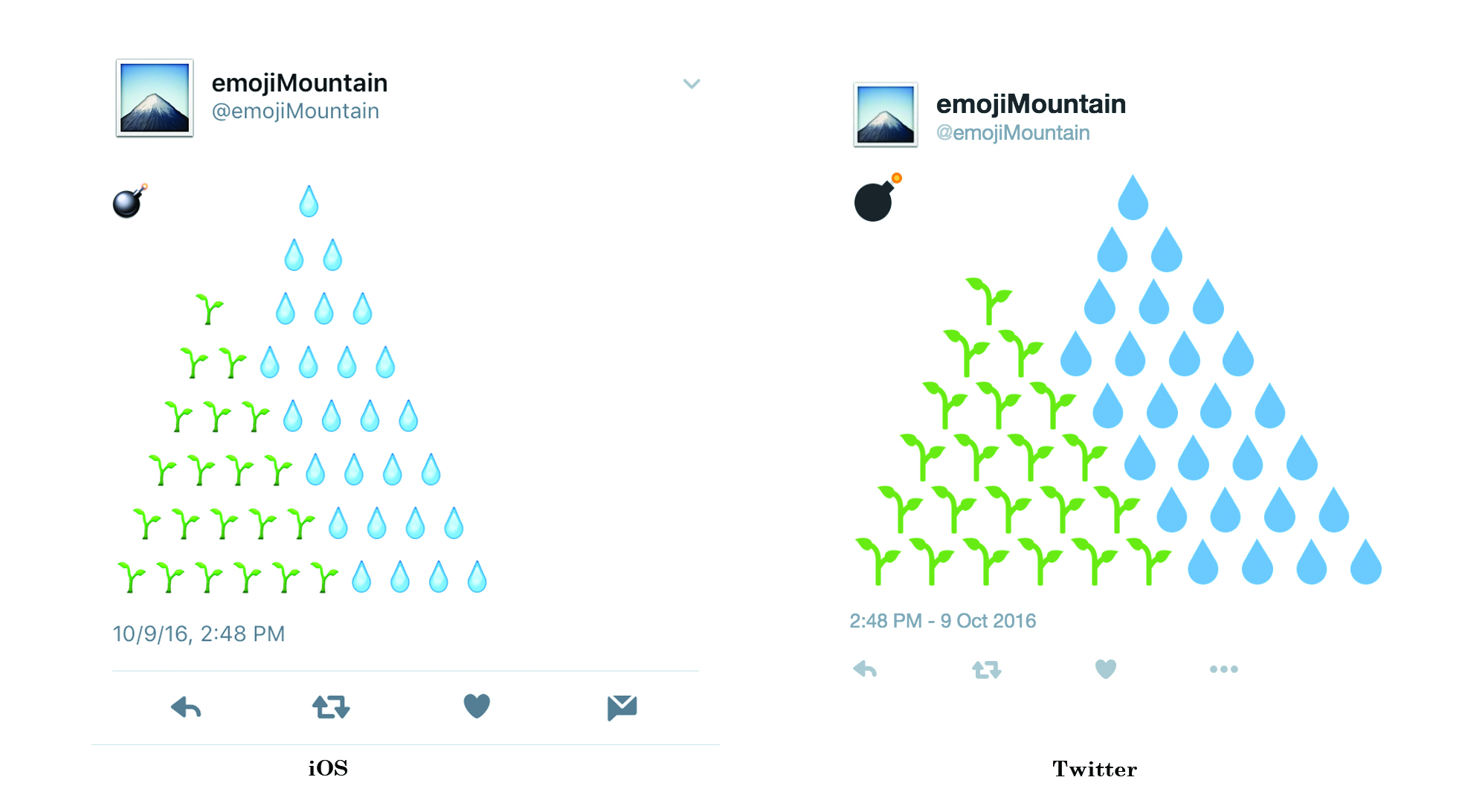

@emojiMountain is a twitter bot that tweets an emojiPainting of some mountains & likes tweets from anyone who uses the word “mountain” or the “🗻” or “🌊” emoji. The input changes every time the program is run.
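
The matching rule could be sketched like this in Python. It only covers the decision about which tweets to like. `should_like` and the sample tweets are hypothetical, and fetching tweets or sending the like would go through the Twitter API, which isn’t shown.

```python
import re

MOUNTAIN = "\U0001F5FB"  # mount fuji emoji
WAVE = "\U0001F30A"      # water wave emoji
WORD = re.compile(r"\bmountain\b", re.IGNORECASE)


def should_like(text):
    """True if a tweet uses the word 'mountain' or the mountain / wave emoji."""
    return bool(WORD.search(text)) or MOUNTAIN in text or WAVE in text


if __name__ == "__main__":
    # Hypothetical tweets; a real bot would fetch these from the Twitter API.
    tweets = ["Hiking the mountain today", "beach day " + WAVE, "just lunch"]
    print([t for t in tweets if should_like(t)])
```

As written, the word match is exact, so “mountains” does not count; widening the pattern to `mountains?` would include plurals.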

The emoji data is currently hardcoded because I ran into issues with my JSON file. As a next step, I would like to write a function that varies the arrangement of the emojis so they look like different mountain ranges, as well as something that moves through the emoji arrays more systematically.
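
Here is one possible shape for that next step, sketched in Python. A random walk generates a skyline of column heights, so each run produces a different range, and `itertools.cycle` steps through the peak emojis in order instead of picking them at random. The palettes, `ridge_heights` and `paint` are hypothetical stand-ins, not the bot’s current hardcoded data.

```python
import itertools
import random

# Hypothetical palettes, not the bot's real hardcoded arrays.
PEAKS = ["\U0001F5FB", "\u26f0\ufe0f"]  # mount fuji, mountain
SKY = "\u2601\ufe0f"                    # cloud
WATER = "\U0001F30A"                    # water wave


def ridge_heights(width, max_height, rng):
    """Random-walk skyline: neighboring columns differ in height by at most 1."""
    heights = [rng.randint(1, max_height)]
    for _ in range(width - 1):
        step = rng.choice([-1, 0, 1])
        heights.append(min(max_height, max(1, heights[-1] + step)))
    return heights


def paint(width=8, max_height=4, seed=None):
    """Return an emoji painting as rows of text, top row first."""
    rng = random.Random(seed)
    heights = ridge_heights(width, max_height, rng)
    peaks = itertools.cycle(PEAKS)  # step through the peak emojis in order
    rows = []
    for level in range(max_height, 0, -1):
        rows.append("".join(next(peaks) if h >= level else SKY for h in heights))
    rows.append(WATER * width)  # a line of waves along the bottom
    return "\n".join(rows)


if __name__ == "__main__":
    print(paint())
```

Passing a `seed` makes a painting reproducible, which could help when matching a tweeted painting back to a run.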

Now, about the likes: at first all the bot did was retweet the user’s tweet, but I like the relationship between generating and aggregating content. I need to scale down how often the bot runs so it becomes clear which tweet is related to which like.

I am particularly interested in writing out the parts of the script that filter the Twitter data. I am interested in when people choose to use written words or an emoji, or to post a picture.
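
A first pass at that filter might look like the Python sketch below. It is an approximation under stated assumptions. `has_media` is a hypothetical flag standing in for the photo information in the Twitter data. Emoji are detected by the Unicode category “Symbol, other” (So), which also catches some symbols that aren’t emoji.

```python
import unicodedata


def classify(text, has_media=False):
    """Label a tweet as 'picture', 'emoji', 'words', 'mixed' or 'other'.

    has_media is a hypothetical flag for an attached photo. Emoji detection
    is rough: any 'Symbol, other' (So) character counts as an emoji.
    """
    if has_media:
        return "picture"
    has_emoji = any(unicodedata.category(ch) == "So" for ch in text)
    has_words = any(ch.isalpha() for ch in text)
    if has_emoji and has_words:
        return "mixed"
    if has_emoji:
        return "emoji"
    if has_words:
        return "words"
    return "other"


if __name__ == "__main__":
    for tweet in ["I love the mountain", "\U0001F5FB", "mountain \U0001F30A"]:
        print(classify(tweet), tweet)
```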

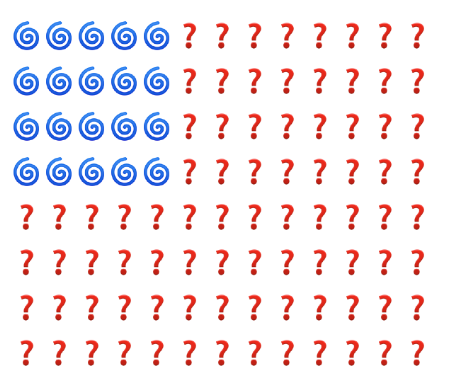

I have a really hard time with election years. All the uncertainty, speeches, opinions, oppression, monies: I get nervous, cranky and depressed. This year – this moment in history seems particularly poised for an even more dynamic emo rollercoaster. So to make it through the next six months (and beyond, eek) – I made a twitter bot.

It might not be a solution to encroaching xenophobia, unjustified military action, economic instability, the prison-industrial complex, crumbling educational systems, depleting natural resources and the general impending doom we face every day. But – I’m trying to learn how to program. So I made it for the sake of more smiles and to see what my computer makes of all this.

It’s a simple Python program. Here is the code on GitHub. Using my own computational capacities, I sorted the emojis from this enormously helpful cheat sheet into two categories, putting all the icons that were mostly blue or mostly red into two lists. At first, it was all whales and hearts, so I loosened the definition of “mostly red/blue” to allow for a diversity of emojis. The script picks one blue and one red emoji at random and prints them out in a formation that looks somewhat like the American flag.
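
The actual code is on GitHub. What follows is only a minimal Python sketch of the idea, with short hypothetical emoji lists in place of my hand-sorted ones and a made-up layout (a blue canton over alternating red and blank stripes).

```python
import random

# Hypothetical short lists; the real ones were hand-sorted from a cheat sheet.
BLUE = ["\U0001F433", "\U0001F499", "\U0001F535"]  # whale, blue heart, blue circle
RED = ["\u2764\ufe0f", "\U0001F34E", "\U0001F534"]  # red heart, red apple, red circle


def flag(blue, red, width=10, height=7, canton_width=4, canton_height=4, white="  "):
    """Lay out one blue and one red emoji as a rough flag, row by row."""
    rows = []
    for r in range(height):
        stripe = red if r % 2 == 0 else white  # even rows red, odd rows blank
        if r < canton_height:
            rows.append(blue * canton_width + stripe * (width - canton_width))
        else:
            rows.append(stripe * width)
    return "\n".join(rows)


def random_flag(rng=random):
    """Pick one blue and one red emoji at random and build the flag."""
    return flag(rng.choice(BLUE), rng.choice(RED))


if __name__ == "__main__":
    print(random_flag())
```

Because only one red and one blue emoji are picked per run, the blank stripes stand in for white. The two spaces are a rough guess at an emoji’s display width.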

A short works cited, because I cannot stop thinking about them:

They Dream Only of America – John Ashbery